Being intelligent is a superpower.

If you’re intelligent, the world opens up all kinds of doors for you. And if you are intelligent, you know exactly how to apply yourself when those opportunities do come up.

It’s a feedback loop that keeps on giving. And there’s value in having such a feedback loop in place.

The more intelligent you are, the more likely you are to add value to society. It just makes good economic sense.

But what happens if intelligence becomes commonplace?

Does it become yet another instance of “the tragedy of the commons”?

Let’s dive in.

Wait but what really is intelligence?

Intelligence is something that has not been defined in tangible terms. If we were to deduce patterns from human experiences, we would probably say one of 2 things: Either a person is born with intelligence or has acquired that intelligence.

Most people are born with some degree of intelligence, be it analytical, creative, or social. The smart ones among them know how to leverage their specific intelligence to move ahead in the world.

And a large majority of these people keep polishing their skills to maintain that intelligence. After all, you gotta keep the blade nice and sharp, and that takes time and effort.

In that sense, intelligence seems like a plant that must be carefully nurtured under the right conditions. Become complacent, and your talent/intelligence will leave you.

But what if you didn't have to water your plant each day? What if intelligence were as commonplace as electricity? A once-precious commodity that is now democratized. At scale.

Any technology, once invented, is meant to distribute its value at scale across the world.

Artificial Intelligence is no different.

AI’s big dream is to democratize intelligence - a noble goal indeed. But we might have to ask a few questions before doing that.

For example, how does democratizing intelligence factor into what it means to be human? How do you reconcile artificial intelligence with the spirit of a human being?

We are creators at heart and mind.

Humans are creators. Maybe not as sophisticated as the Lord in the Heavens but nonetheless humans are pretty effing good at creating.

Our entire existence is based on our ability to create things. Be it something as abstract as economics or something tangible like computers.

If we go a bit deeper, we’ll find that society is made up of systems arranged like two concentric circles.

The outer circle is a biological one - systems put in place by nature. Example: We need water to live. Else we die. It’s pretty simple. But water is a scarce resource. So rules around its collection, distribution, and consumption needed to be made. A human-made system. That’s the inner circle.

And the human-made system is almost always a function of intelligence. Different perspectives come together, and different forms of intelligence come together to create value. To live in this illusion that we have created.

So when something so fundamental as intelligence begins to be scaled, what does it mean for society on the macro level? And what does it mean to be human on the micro-existential level?

When the first human systems were created (think early tribes), it would have been incredibly brave of them to venture out into the physical world. A world that they knew was rife with terrors. A world where death could sneak in at any time and kill any creative spirit that they might have had.

Take the discovery of fire, for example. It’s a beautiful example of the spirit of curiosity that guides humans to marvelous things. When the first man accidentally discovered that rubbing 2 stones together could create a spark, imagine how he would have felt.

Even more so because he did not know what the result could have been. In an alternate world, this simple rubbing of 2 stones together could have easily created something else. Maybe even something catastrophic. Of course, we now know that’s not possible but he wouldn't know that. He dared to venture into the unknown and find answers.

And that’s what creativity really is.

When I sit down to write my essays, I feel small. Does what I’m about to say even matter? What value will people get from this? What will Person X from ABC company think of me? All these things come up. But I gather the courage to sit down and write anyway. Go deep into my brain, connect different ideas and dare to put my thoughts on paper.

Being brave is a huge part of creating something meaningful. You have to be willing to bare yourself and venture into the unknown. Take that away, and it’s hard to even call something creation anymore. Yet that’s what AI tools claim in their copy/messaging - we help you create things.

But do they, though?

Are we hiding behind the veil of creativity?

I wonder how convenient it would be if we could just type in a few words about an idea and the AI would just spin up some content. It would be so easy to reap the rewards of the content and claim ownership and feel pride over it.

Yet at the same time, feel somewhat safe and guilty. Knowing that we didn't really create that, did we? That’s why we get pissed off when someone else tries to pass off our work as theirs. Yet here we are doing the same thing with AI as we “create” our stories.

Now, I know most AI tools today aren't anywhere near replacing human writing. You still have to edit a lot of stuff. But if I were to bet, I’d say the AI scaling machine is going to automate the creation process to the point where human intervention is no longer needed.

Now the non-creator can also create.

For example, tools like Jasper, Writesonic, Lex, and Copy.ai enable people to write things even if they’re not writers. Same with a lot of AI image-creation apps built on top of Stable Diffusion.

This raises the question of ownership of the created art - who is the creator here? The person or the tool? Is having some fledgling idea enough to attribute ownership of the created work to a person?

The United States Patent and Trademark Office (USPTO) ruled that AI systems cannot be listed or credited as inventors on a US patent. The decision stated that an “inventor” under current patent law can only be a “natural person.”

That ruling is about patents rather than art, but it suggests that even if a work is created by AI, the ownership would be attributed to a person, most likely the one owning or using the software.

Which raises these questions:

With AI, people are able to purchase intelligence like a commodity. So what does it mean for the person who can’t afford “this intelligence?”

Will he be able to get by on his own “individual intelligence” as he tries to compete against the global intelligence that is AI?

Does this mean that intelligence is then confined to the elite? And does that in turn end up arming a small minority of people, who were already pretty intelligent to begin with, with global intelligence?

I’m not sure but the answer might not be so black and white. After all, the AI is only fed information about relevant patterns that are human-made.

The human would still have to make new patterns all the time for the AI to keep updating its “intelligence”. There’s still a way to go for the AI itself to create patterns, learn those patterns, and then predict results.

Hey we are social animals right?

A lot of intelligence is about communicating things. The way you communicate plays a huge role in your success. My trade, writing copy for tech companies, has taught me how much value they put on communicating their product in just the right way.

The way you say something is more important than what you say. It’s the how not the what. And the “how” is the entire science behind consumer psychology. An entire field dedicated to understanding how to communicate things in the best way possible to make people want to engage with the brand and the product.

What happens when AI enters this equation?

Let’s take an example from consumer science itself. Let’s say you’ve won the Nobel Prize in Physics. And in your speech, you want to pitch your invention and its utility for commercial purposes.

Now let’s say that before the speech, you had lunch with a fellow Nobel laureate, Daniel Kahneman. He gives you a tip: “Make sure you say you were ‘lucky’ - that will help you.”

If you acknowledge in your speech that your invention was in large part due to you being “lucky” and you actually use the word lucky (ideally many times over), you would end up convincing a good chunk of people to have a chat with you.

That bit of knowledge imparted by Daniel became yours to use and you applied it and got the rewards. And people would label you as “intelligent”. And it would be fair. After all, you did most of the work.

But what happens when AI enters the equation? The same thing but done at scale. Except it isn’t just one little tidbit - it’s everything known to mankind about how to persuade people to do things.

In essence, it would tell us only the things that we want to hear. It would feel like a machine is manipulating us. A machine that wouldn't have existed without us.

This is not to say it’s wrong - far from it. It’s pretty efficient if you ask me. The AI is taking in information from all these sources, combining the total intelligence of all humankind, and creating words/content that it KNOWS will hit just the right chord. It’s the sum of global intelligence after all.

But on the recipient side, how would it feel as a consumer to be sold things by a machine? Humans enter into relationships with other humans. They do business with humans. Will adding the AI component reduce human trust? Or is it something we’ll just get used to?

Most transactions today are automated and mechanical - adding a human touch lends some degree of meaning to the entire thing. That’s an issue I’ve written about in my previous essay on the problem of scalability.

So what does the future hold?

So what does all this mean? There are 2 consequences that I think will arise from this movement. The first: AI won't be taking over human jobs any time soon.

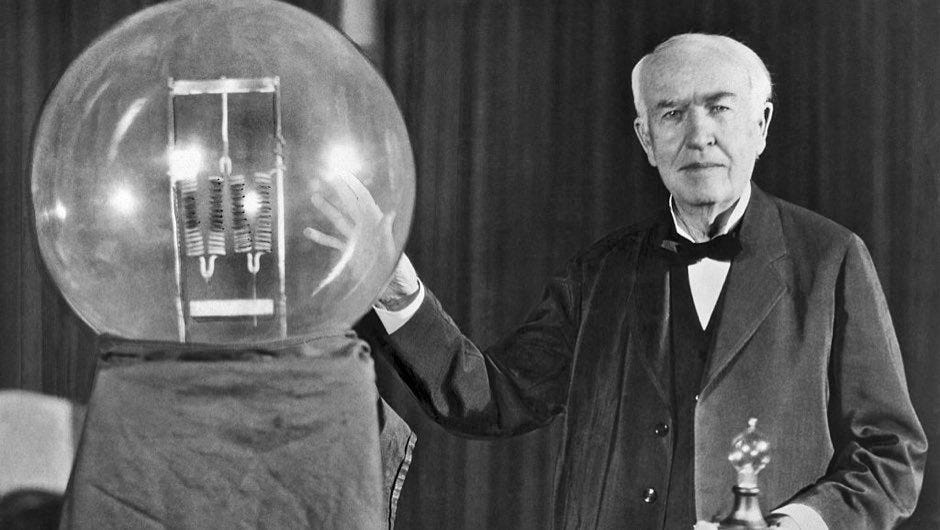

I reckon that AI innovation will be deliberately held at a ceiling. Much like the lightbulb.

In the early days of the lightbulb, filaments kept getting better and bulbs kept lasting longer. The longer a bulb lasted, the less often anyone needed to buy another one. And if there’s no repeat demand, the lightbulb companies go out of business.

So in the 1920s the major lightbulb companies came together, in what became known as the Phoebus cartel, and agreed to cap the life of their bulbs at around 1,000 hours, well below what they could already achieve. This meant that users would continually need to change their lightbulbs and the companies would stay in business.

These companies effectively cartelized the slowing down of innovation.

I reckon something like this might happen with AI.

In the ideal world, all jobs are done by AI leaving humans to chill and relax and focus on “creative endeavors” as Naval put it. But once that ceiling is hit, humans would be rendered ineffective. Especially the people who aren't as tech-savvy and who don't have access to technology.

Because AI would become so effective, companies will decide to scale innovation down just so humans can still get up and go to work. And feel like they’re important. That’s the thing about us humans. We want to feel needed. To feel important.

It’s also worth noting that the results of AI will eventually converge on an average, because it only surfaces patterns that have “survived” - a form of survivorship bias. The results will be the “average” of all “successful” ideas, and that's where the problem lies.

The same patterns will be created over and over again by AI and resurfaced because that is what the masses perceive to be “valuable”, so nothing original will ever be created.

So if new value is to be created, it’s humans who would have to do the work.

Unless at some point, the AI begins to consolidate ideas from all brains and create connections that can't even be processed by a single human mind. But that day seems a bit far off for now.

Nevertheless, we should be mindful of using technology - especially something as powerful as AI.

Being in the creative field myself and owning a copywriting and design studio, I look at AI as a tool in the short term to augment my skills and reduce the costs of serving a single customer.

To that end, if you’re a SaaS founder and you wanna revamp your landing page/sales page for your Black Friday Sale, Christmas Sale, or whatnot, then there are 4 ways in which I can help you.

Option 1: Get a complete copy and design overhaul for your landing page, complete with your brand colors, guidelines, and whatnot. ($1,000)

Option 2: Get a lo-fi wireframe for your landing page. You want a fresh design wireframe along with revamped copy so that the two play nice with each other. ($750)

Option 3: Get a before-after version of any existing landing page of your choice. If you’re happy with your existing designs and just want the copy changed, then this is perfect for you. ($500)

Option 4: Get a UX copy/design audit of your landing page - things you need to fix, elements you can optimize, design + copy tweaks you can make. You get the idea. ($100)

Well, that’s it for today’s issue. I’ll come back to your inbox soon! Until then have a great day and enjoy your upcoming holidays!

If you liked this essay, consider sharing it with your nerdy/geeky/passionate/skeptical AI friends :)